AI’s Growing Threat

You may have seen the news about Taylor Swift taking action against AI-generated nude photos of her spreading across the internet. This controversy is alarming. Some online comments suggest she is overreacting and dismiss it as the price of being a celebrity. But this is no laughing matter: the same thing can happen, without consent, to anyone active on social media, and there are currently few consequences for the cybercriminals who create and post these false images and stories at someone else’s expense. The good news is that Taylor is taking swift action, refusing to let her image be dragged into this AI rabbit hole without some kind of solution or accountability. Despite the unfortunate incident, Taylor has a voice that is heard, which makes her a strong advocate for addressing the growing problem of deep fakes and for solutions that help other victims.

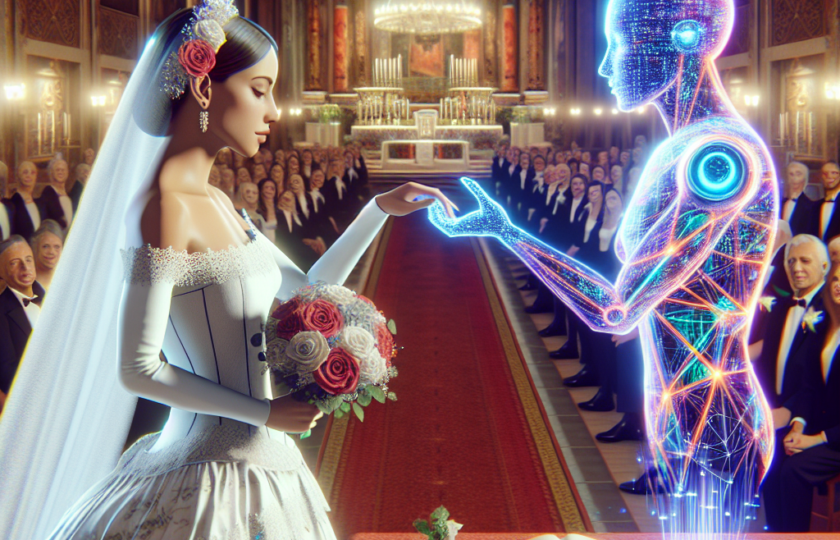

Deep fakes are manipulated media, often created with artificial intelligence techniques, that appear authentic but are actually fabricated. This post aims to shed light on the threat posed by deep fakes and explore strategies to prevent their harmful effects. Posting misinformation and fake photos of people can seriously damage a person’s reputation and self-esteem and cast doubt on their moral character.

In 2019, a report released by the AI firm Deeptrace Labs showed that deep fakes were overwhelmingly weaponized against women. Most of the victims, it said, were Hollywood actors and South Korean K-pop singers.

Prevention Strategies

Understanding the Threat

Deep fakes have the potential to cause significant harm in various ways. They can be used to spread misinformation, manipulate public opinion, damage reputations, and invade privacy. Easy access to powerful AI tools and the vast amount of available data make it increasingly challenging to distinguish real content from fake. This is especially dangerous given that we are in the midst of an election and global conflicts. News, media, and other content need to be as relevant, accurate, and truthful as possible to avoid serious consequences for human life.

Raising Awareness

Education and awareness play a crucial role in combating the threat of deep fakes. When the public is informed about the existence and potential dangers of deep fakes, individuals can become more vigilant and critical consumers of media. Promoting media literacy and teaching people how to identify signs of manipulation can help mitigate the impact of deep fakes. Many social media companies and major players in the tech industry already review content daily and try to remove anything that violates their platforms’ policies.

For the most effective results, companies need to train, certify, or hire specific staff to review content being spread on these platforms. They should also mark deep fakes with a symbol that indicates that the content is not authentic.

Technological Solutions

Developing advanced technologies to detect and combat deep fakes is essential. Researchers are actively working on algorithms and tools that can identify manipulated content. These technologies can analyze facial inconsistencies, unnatural movements, or artifacts that indicate tampering. Collaboration between tech companies, researchers, and policymakers is crucial to accelerating the development and deployment of such solutions. Essentially, we can use AI technologies to combat AI-generated deep fakes by filtering through content faster than humans and eliminating deep fakes before they become a threat or show up on feeds.

Authenticity Verification

Implementing robust systems for verifying the authenticity of media content can help prevent the spread of deep fakes. This can involve the use of digital signatures, watermarking, or blockchain technology to ensure the integrity and origin of media files. By providing users with a reliable way to verify the authenticity of content, the impact of deep fakes can be minimized.
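To make the integrity idea concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular platform does it. It assumes a publisher attaches a tag to a media file when it is posted, and that the tag is checked again later to see whether the file has been altered. The function names and the key are hypothetical. For simplicity it uses an HMAC (a keyed hash based on a shared secret) from Python's standard library rather than a true digital signature; a real provenance system would more likely use public-key signatures so that anyone can verify a file without holding the secret key.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the raw media bytes."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, secret_key: bytes, tag: str) -> bool:
    """Return True only if the tag matches the media bytes exactly."""
    expected = sign_media(media_bytes, secret_key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"hypothetical-publisher-key"      # assumption: kept secret by the publisher
    original = b"original image bytes"       # stand-in for a real image file
    tag = sign_media(original, key)

    tampered = original + b" (edited)"       # any change to the bytes breaks the tag
    print("original verifies:", verify_media(original, key, tag))
    print("tampered verifies:", verify_media(tampered, key, tag))
```

A check like this only shows that a file is unchanged since it was tagged. It cannot say whether the content was truthful to begin with, which is why it works best alongside the other strategies in this post.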

Legal Frameworks

Establishing clear legal frameworks to address the creation and dissemination of deep fakes is vital. Laws should be updated to address the unique challenges posed by deep fakes, including privacy violations, defamation, and intellectual property infringement. Collaboration between governments, technology companies, and legal experts is necessary to develop comprehensive legislation that can deter the creation and distribution of deep fakes. One challenge I have seen with AI is that it is advancing faster than the laws written to govern it. We need to slow down the advances until a set of laws and boundaries is established.

“Computers can reproduce the image of a dead or living person,” says Daniel Gervais, a law professor at Vanderbilt University who specializes in intellectual property law. “In the case of a living person, the question is whether this person will have rights when his or her image is used.” (Currently, only nine U.S. states have laws against non-consensual deep fake photography.) – MSN

Responsible Media Practices

Media organizations and social media platforms have a crucial role to play in preventing the spread of deep fakes. Implementing stricter content moderation policies, fact-checking mechanisms, and user reporting systems can help identify and remove deep fake content promptly. Encouraging responsible media practices and promoting ethical guidelines within the industry can also reduce the impact of deep fakes. Platforms can verify profiles and users’ identities, for example by allowing only one profile per IP address and per business. One of the biggest problems many platforms face is that they allow a single user to create 100 profiles. Misinformation and deep fake material then spread rapidly, which makes them hard to control.

It is crucial for individuals, organizations, and policymakers to work together to protect the integrity of digital content and ensure a safer online environment for all.